According to Google, association rule learning is a well-researched method for discovering interesting relations between variables in large databases. For example, the rule {onions, potatoes} ⇒ {hamburger meat} found in the sales data of a supermarket would indicate that if a customer buys onions and potatoes together, they are likely to also buy hamburger meat.

An association rule is a pattern stating that when one event occurs, another event will occur with a certain probability. Association rules are if/then statements that help to find relationships between objects that are frequently used together. For example, if a customer buys milk, he may also buy cereal; or if a customer buys a tablet or a computer, he may also buy a pen drive or a hard disk.

Association rules are evaluated on two basic criteria: support and confidence.

SUPPORT: Support measures how often an item-set appears in the database. It is sometimes expressed as a percentage of the total number of records in the database. Suppose we have two baskets, T1 = {A, A, C} and T2 = {A, X}, where A, C and X are items. The support count of an item-set is always calculated with respect to the number of transactions that contain that item-set, not the number of times an item occurs, so the repeated A in T1 counts only once. So:

- the absolute support of A, i.e. the absolute number of transactions that contain A, is 2

- the relative support of A, i.e. the fraction of transactions that contain A, is 2/2 = 1
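The two support values above can be checked with a short Python sketch. The function names `absolute_support` and `relative_support` are illustrative only and do not come from any library. Each basket is converted to a set so that the repeated A in T1 is counted once.

```python
# Baskets from the example above; T1 lists A twice on purpose.
baskets = [["A", "A", "C"], ["A", "X"]]


def absolute_support(itemset, baskets):
    """Number of baskets that contain every item in `itemset`."""
    wanted = set(itemset)
    return sum(1 for basket in baskets if wanted <= set(basket))


def relative_support(itemset, baskets):
    """Fraction of baskets that contain every item in `itemset`."""
    return absolute_support(itemset, baskets) / len(baskets)


print(absolute_support({"A"}, baskets))  # 2
print(relative_support({"A"}, baskets))  # 1.0
print(relative_support({"C"}, baskets))  # 0.5
```

Item C appears only in T1, so its relative support is 1/2, while A appears in both baskets and gets the maximum value of 1.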

Association rules are generated by analysing the data for frequently occurring if/then patterns, and criteria such as support are then used to identify the most important relationships.

Below is how we calculate support, confidence and lift.

For example, if a supermarket database has 100,000 point-of-sale transactions, out of which 2,000 include both items A and B and 800 of these include item C, the association rule "If A and B are purchased then C is purchased on the same trip" has a support of 800 transactions (alternatively 0.8% = 800/100,000) and a confidence of 40% (=800/2,000). One way to think of support is that it is the probability that a randomly selected transaction from the database will contain all items in the antecedent and the consequent, whereas the confidence is the conditional probability that a randomly selected transaction will include all the items in the consequent given that the transaction includes all the items in the antecedent.
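As a quick check of the arithmetic in this example, here is a minimal Python sketch. The variable names are made up for illustration; the numbers are the ones quoted in the paragraph above.

```python
total_transactions = 100_000  # all point-of-sale transactions
both_a_and_b = 2_000          # transactions containing A and B (the antecedent)
a_b_and_c = 800               # of those, transactions that also contain C

# Support: share of all transactions containing antecedent and consequent.
support = a_b_and_c / total_transactions

# Confidence: share of antecedent transactions that also contain the consequent.
confidence = a_b_and_c / both_a_and_b

print(f"support = {support:.1%}")        # support = 0.8%
print(f"confidence = {confidence:.1%}")  # confidence = 40.0%
```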

Lift is one more parameter of interest in association analysis. Lift is the ratio of confidence to expected confidence. Expected confidence, in the above example, means the confidence we would see if buying A and B did not enhance the probability of buying C. It is the number of transactions that include the consequent divided by the total number of transactions. Suppose the total number of transactions that include C is 5,000. Then the expected confidence is 5,000/100,000 = 5%. For our supermarket example, lift = confidence / expected confidence = 40% / 5% = 8. Hence lift tells us how much the probability of the "then" (consequent) part increases given the "if" (antecedent) part.

A lift ratio larger than 1.0 implies that the antecedent and the consequent occur together more often than would be expected if the two sets were independent. The larger the lift ratio, the stronger the association.
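The lift calculation for the supermarket example can be sketched in Python as follows. The function `lift` and its parameter names are hypothetical helpers written for this article, not part of any package; the inputs are the transaction counts used in the example.

```python
def lift(n_total, n_antecedent, n_consequent, n_both):
    """Lift of a rule, computed from raw transaction counts.

    n_total       -- all transactions in the database
    n_antecedent  -- transactions containing the "if" part (A and B)
    n_consequent  -- transactions containing the "then" part (C)
    n_both        -- transactions containing both parts
    """
    confidence = n_both / n_antecedent
    expected_confidence = n_consequent / n_total
    return confidence / expected_confidence


value = lift(n_total=100_000, n_antecedent=2_000, n_consequent=5_000, n_both=800)
print(round(value, 2))  # 8.0
```

A result of 8 means that customers who buy A and B are eight times as likely to buy C as a randomly chosen customer.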

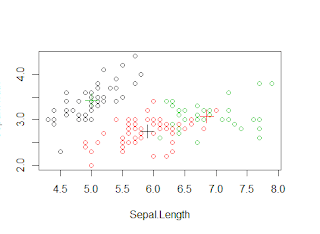

In the next article, I will explain how to do this with R.